Performance

Measure your Skill

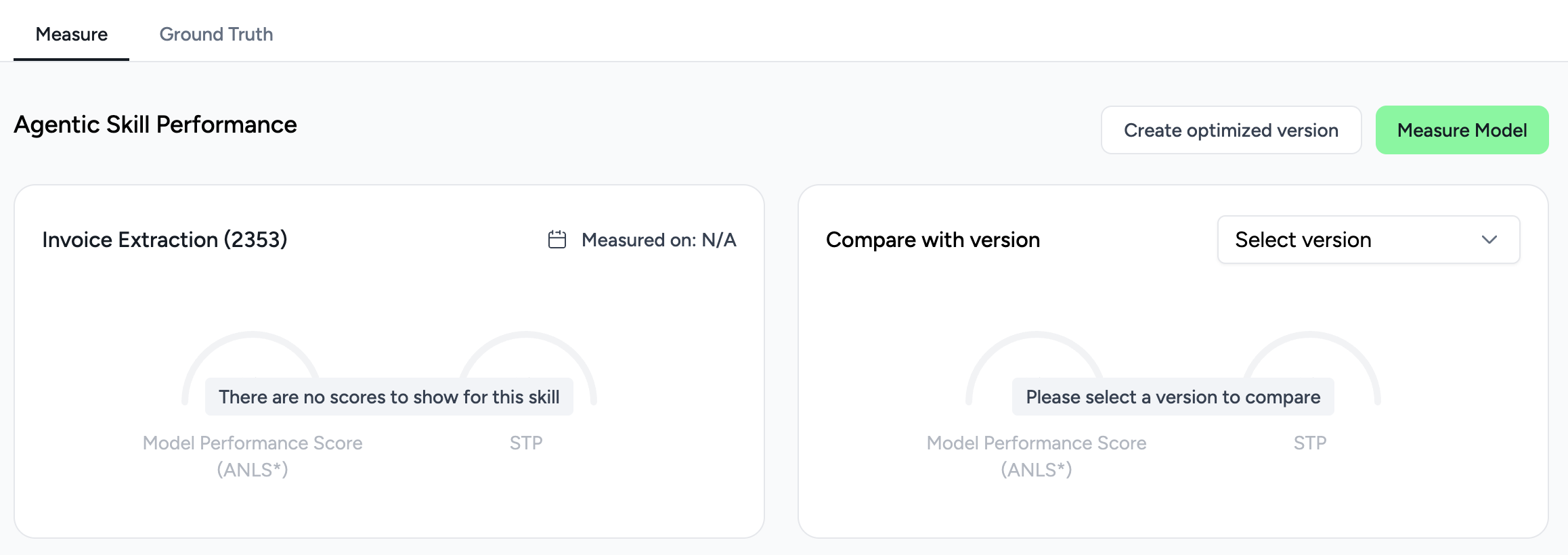

Go to the Measure tab and click Measure model.

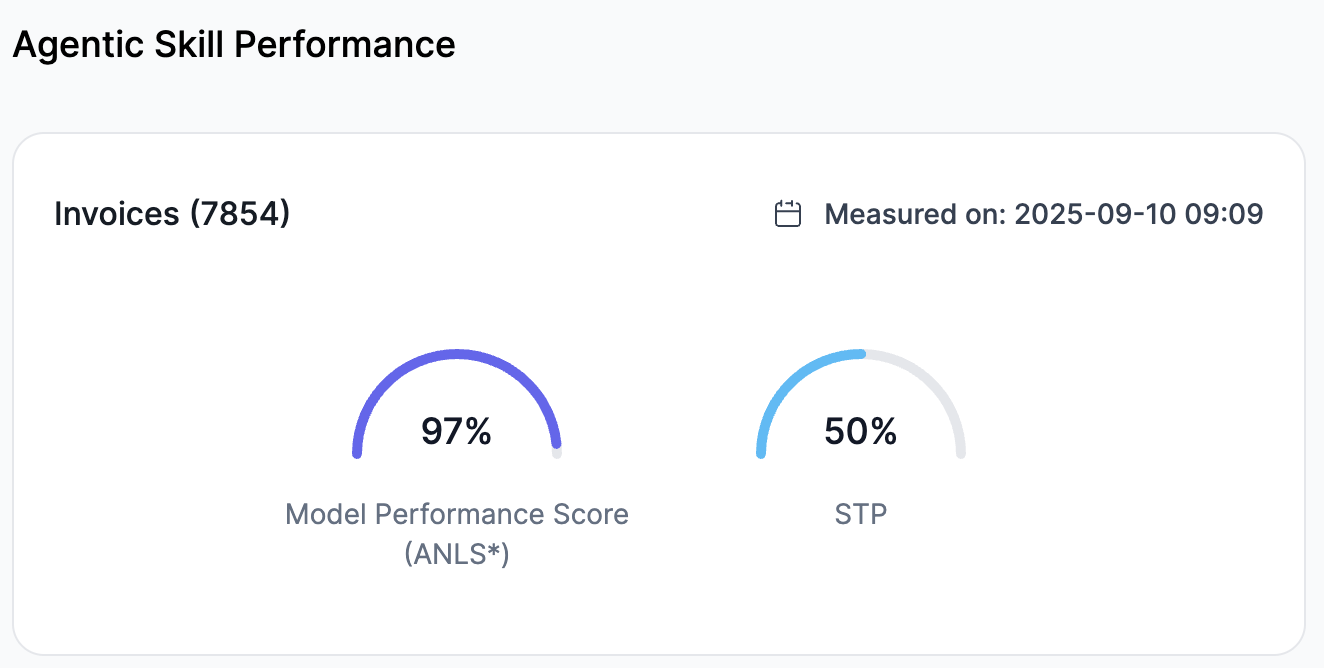

After your skill has been evaluated, the Measure Performance tab will provide you with two key metrics:

- Model Performance Score: This score (ANLS*) gives you a single, average number that represents the accuracy of your skill across all fields. It's a quick way to see how well the skill is performing overall.
- Straight-Through Processing (STP): This metric shows the percentage of documents your skill handled perfectly, with no human intervention required. The goal is to get this number as close to 100% as possible, which ensures your automations can run smoothly without any user corrections.
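To make the two metrics concrete, here is a minimal sketch of how they relate to field-level results. It is an illustration only, not the product's actual computation: the `results` data, the function names, and the rule that a document counts as straight-through only when every field scores a perfect 1.0 are all assumptions.

```python
from statistics import mean

# Hypothetical evaluation results: document -> {label: similarity score in [0, 1]}.
results = {
    "invoice_001.pdf": {"total": 1.0, "date": 1.0, "vendor": 1.0},
    "invoice_002.pdf": {"total": 1.0, "date": 0.8, "vendor": 1.0},
    "invoice_003.pdf": {"total": 1.0, "date": 1.0, "vendor": 1.0},
    "invoice_004.pdf": {"total": 0.5, "date": 1.0, "vendor": 1.0},
}


def overall_score(results):
    """Average of every field score across all documents."""
    return mean(score for fields in results.values() for score in fields.values())


def stp_rate(results):
    """Share of documents in which every field scored a perfect 1.0 (assumed rule)."""
    perfect = sum(
        1 for fields in results.values() if all(s == 1.0 for s in fields.values())
    )
    return perfect / len(results)


print(f"Model Performance Score: {overall_score(results):.1%}")  # 94.2%
print(f"Straight-Through Processing: {stp_rate(results):.1%}")   # 50.0%
```

Note how the two numbers diverge: most fields are correct, so the average score is high, but a single imperfect field is enough to take a document out of the straight-through count.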

ANLS* stands for Average Normalized Levenshtein Similarity, a well-known metric in machine learning and AI. It measures how similar two strings are by counting the single-character edits (insertions, deletions, substitutions) needed to transform one string into the other, then normalizing that count by the string length so that scores are comparable across fields.
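The string comparison behind this metric can be sketched in a few lines of Python. This is a simplified illustration that covers only the plain-string case: the function names are hypothetical, and any additional rules the product applies (for example score thresholds or handling of non-text values) are not shown.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    if len(a) < len(b):
        a, b = b, a  # keep the shorter string in the inner loop
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(
                min(
                    previous[j] + 1,         # deletion
                    current[j - 1] + 1,      # insertion
                    previous[j - 1] + cost,  # substitution (or match)
                )
            )
        previous = current
    return previous[-1]


def similarity(extracted: str, ground_truth: str) -> float:
    """Normalized Levenshtein similarity: 1.0 is identical, 0.0 is entirely different."""
    longest = max(len(extracted), len(ground_truth))
    if longest == 0:
        return 1.0  # two empty strings are treated as a perfect match
    return 1.0 - levenshtein(extracted, ground_truth) / longest


print(similarity("INV-1001", "INV-1001"))  # 1.0
print(similarity("INV-1001", "INV-1007"))  # 0.875 (one substitution in eight characters)
```

Averaging such per-field similarities over all fields yields a single overall number in the spirit of the Model Performance Score.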

These metrics are broken down in two tables: one with statistics for each document and one with statistics for each label.

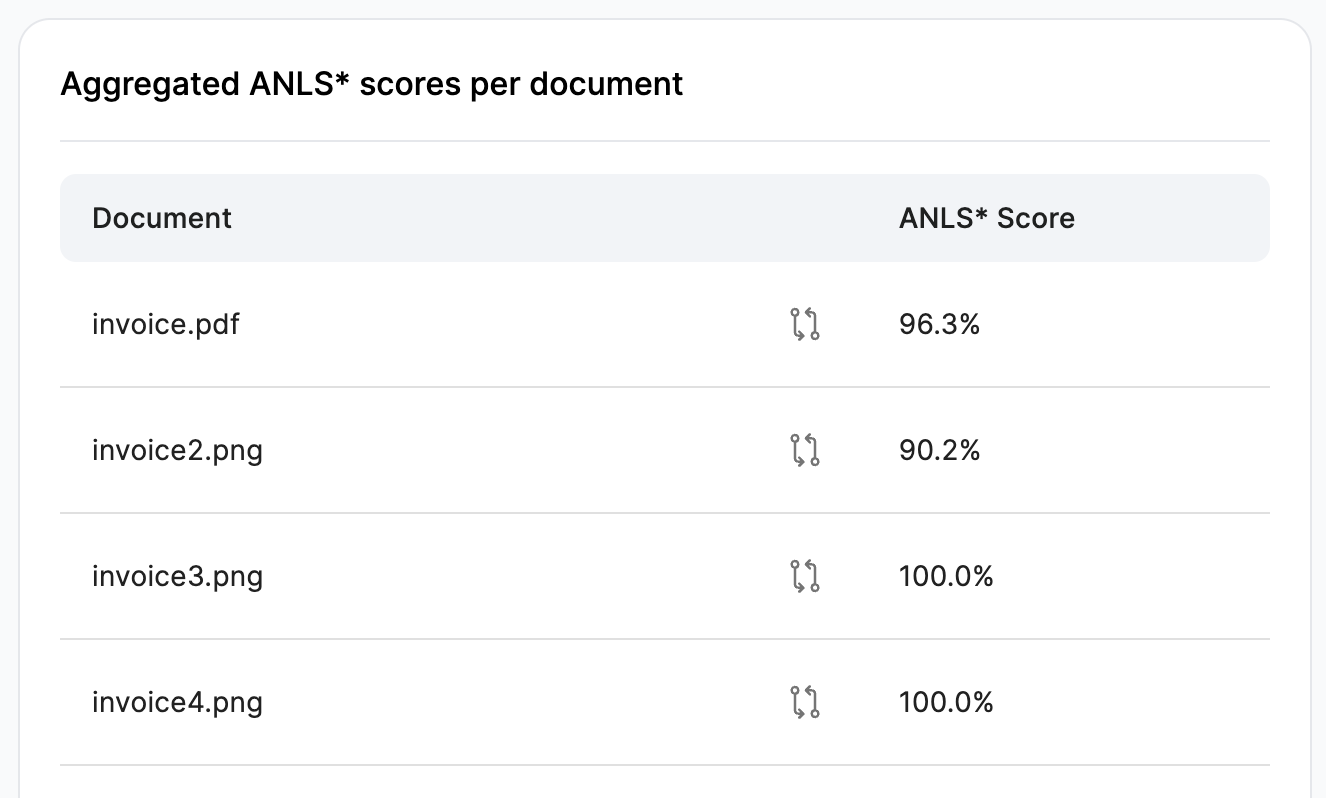

Aggregated ANLS* scores per document

This table lists all the documents you reviewed and provides a score for each one. The score represents the percentage of information that the skill extracted correctly for that specific document.

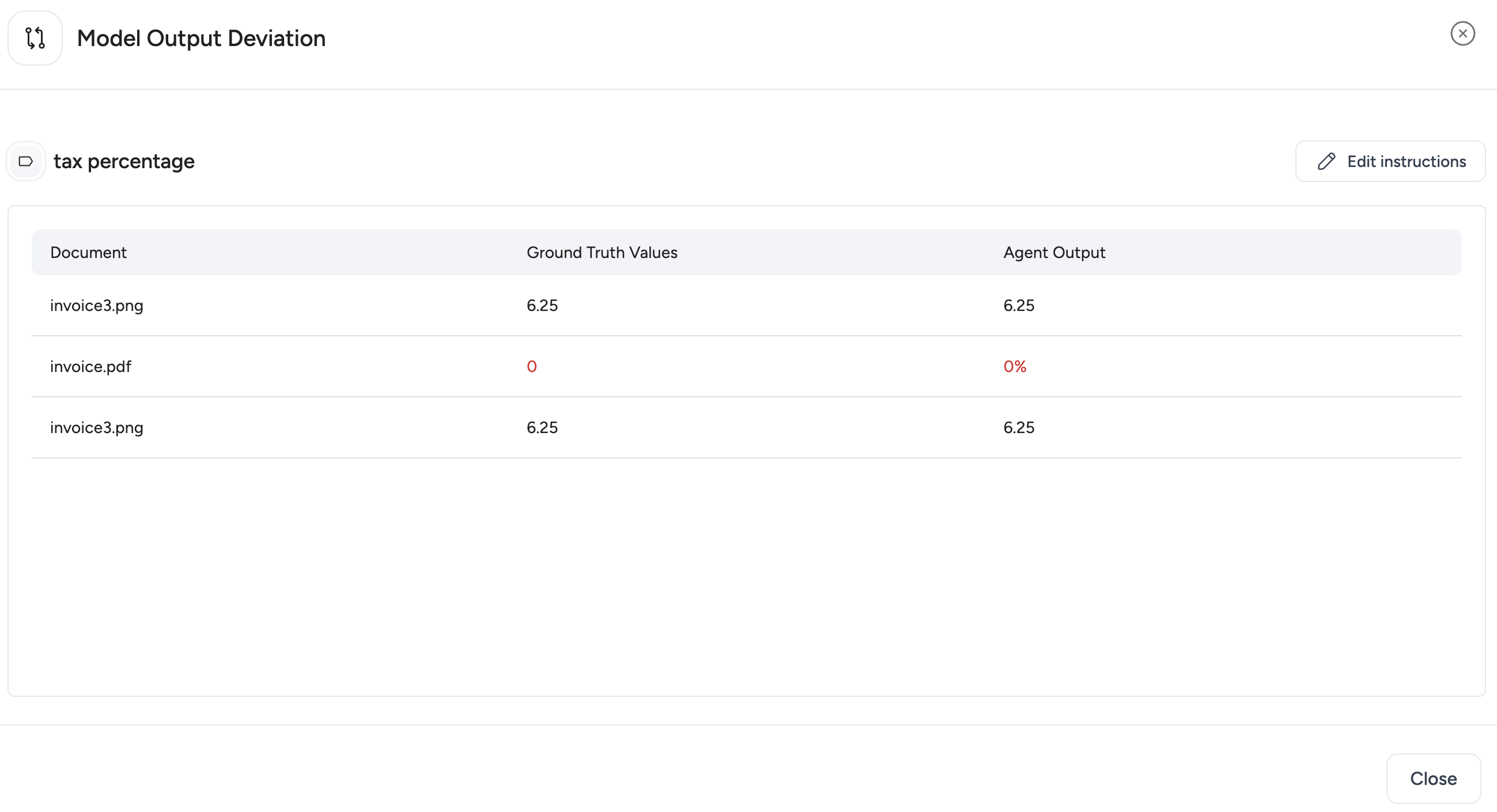

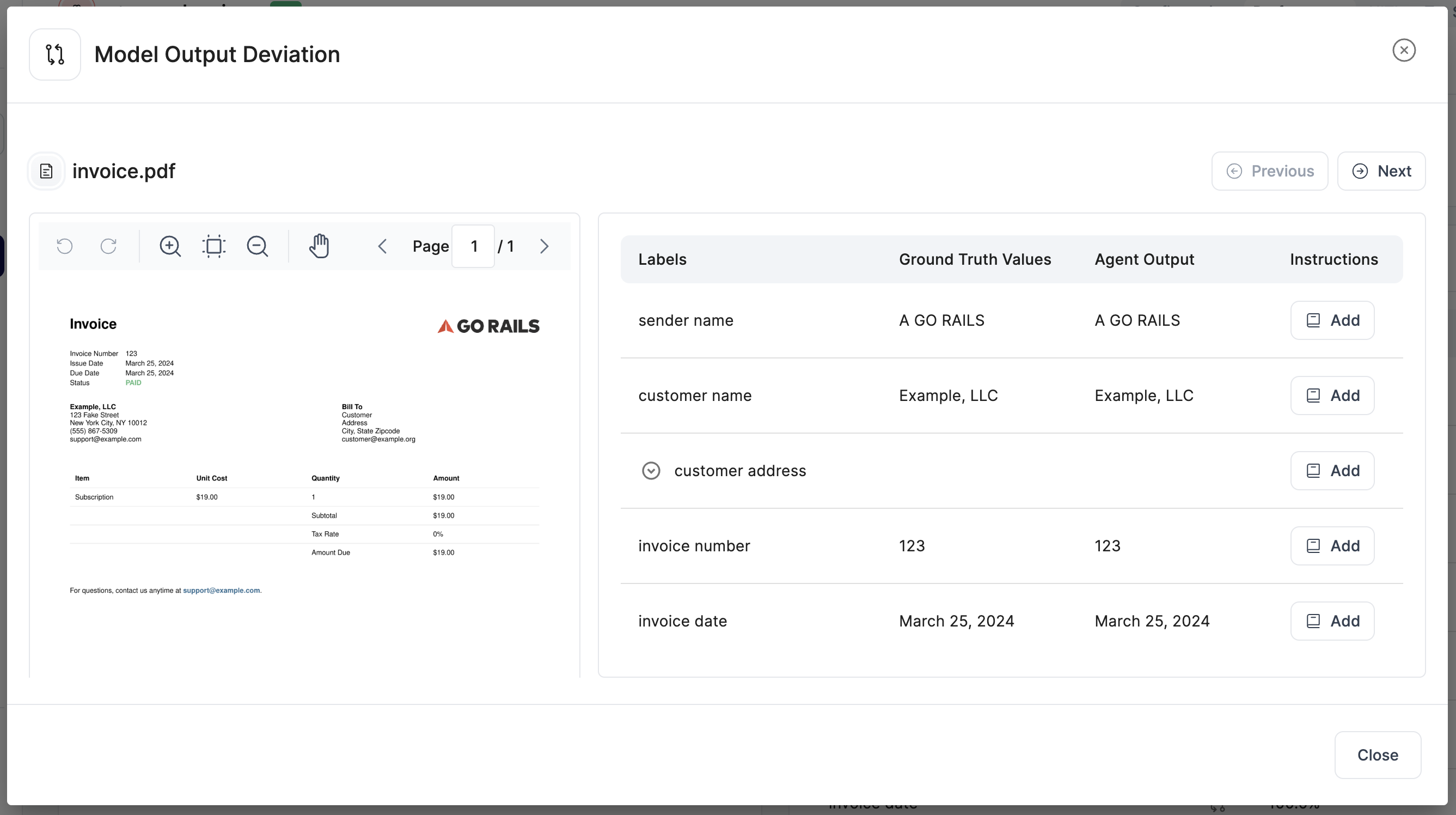

If you click on the 🔃 icon, you can see a detailed breakdown of the skill's performance. This view shows you the difference between the values extracted by the skill and your reviewed Ground Truth values.

You can modify the label instructions from this view.

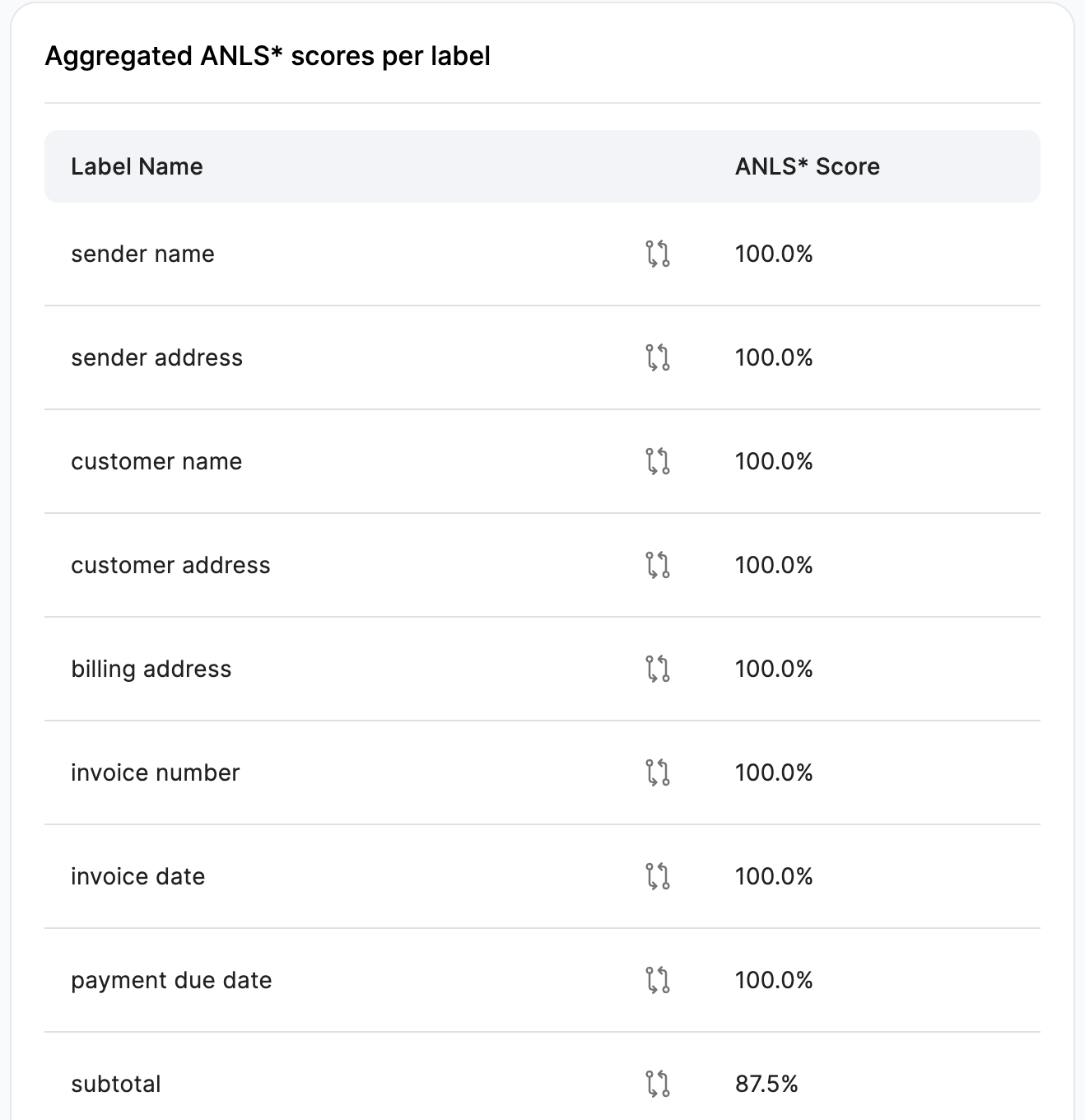

Aggregated ANLS* scores per label

This table contains a list of all your labels and their scores. The score represents the percentage of times the skill correctly extracted information for that label across all the documents you uploaded to Ground Truth.

If you click the 🔃 icon, you can see a detailed breakdown of the skill's output deviation for that specific label. This view shows you the difference between the skill's extracted values and your Ground Truth values for all the documents you've processed.

From here, you can also modify the label instructions.
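The per-document and per-label tables can be thought of as two different averages over the same field-level scores. The sketch below illustrates that idea with made-up data; the `scores` list and the `aggregate` helper are hypothetical, and the product may weight or combine scores differently.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical field-level scores: (document, label, similarity score in [0, 1]).
scores = [
    ("doc_a.pdf", "total", 1.0),
    ("doc_a.pdf", "date", 0.5),
    ("doc_b.pdf", "total", 1.0),
    ("doc_b.pdf", "date", 1.0),
]


def aggregate(scores, key_index):
    """Average the scores grouped by document (key_index=0) or by label (key_index=1)."""
    groups = defaultdict(list)
    for row in scores:
        groups[row[key_index]].append(row[2])
    return {key: mean(values) for key, values in groups.items()}


per_document = aggregate(scores, 0)
per_label = aggregate(scores, 1)

print(per_document)  # {'doc_a.pdf': 0.75, 'doc_b.pdf': 1.0}
print(per_label)     # {'total': 1.0, 'date': 0.75}
```

Reading the two views together helps locate a problem: a low per-label score across many documents points at that label's instructions, while a low score on a single document suggests that the document itself is unusual.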